reading-notes

This repo is required for Asac labs class 2.

Project maintained by ManarAbdelkarim. Hosted on GitHub Pages. Theme by mattgraham.

Web Scraping

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. The web scraping software may directly access the World Wide Web using the Hypertext Transfer Protocol or a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis.
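To make the definition concrete, here is a minimal sketch of the "gather and copy into a local database" idea. It pulls items out of an HTML snippet with BeautifulSoup (introduced below) and stores them in a local SQLite database for later analysis. The HTML snippet, table name and column names are hypothetical. A real scraper would download the HTML over HTTP instead of using a hard-coded string.

```python
import sqlite3

from bs4 import BeautifulSoup

# Hypothetical page content; a real scraper would fetch this over HTTP
HTML = """
<ul id="scores">
  <li><span class="team">Team A</span> <span class="goals">2</span></li>
  <li><span class="team">Team B</span> <span class="goals">1</span></li>
</ul>
"""


def extract_rows(html):
    """Return a list of (team, goals) tuples found in the snippet."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for item in soup.select("#scores li"):
        team = item.find("span", class_="team").get_text(strip=True)
        goals = int(item.find("span", class_="goals").get_text(strip=True))
        rows.append((team, goals))
    return rows


def store_rows(conn, rows):
    """Copy the extracted rows into a local table for later queries."""
    conn.execute("CREATE TABLE IF NOT EXISTS scores (team TEXT, goals INTEGER)")
    conn.executemany("INSERT INTO scores VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # pass a file path instead to keep the data
store_rows(conn, extract_rows(HTML))
print(conn.execute("SELECT team, goals FROM scores ORDER BY goals DESC").fetchall())
# prints [('Team A', 2), ('Team B', 1)]
```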

BeautifulSoup

For web scraping we are going to use BeautifulSoup, a popular Python library for parsing HTML and XML documents.

1. Getting Started:

```shell
pip install beautifulsoup4
```

or

```shell
poetry add beautifulsoup4
```
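A quick way to confirm that the installation works is to parse a small HTML string, which needs no network access. The package is installed as `beautifulsoup4` but imported as `bs4`. The HTML string below is only a throwaway example.

```python
import bs4
from bs4 import BeautifulSoup

# If this import succeeds, beautifulsoup4 is installed in the active environment
print("beautifulsoup4 version:", bs4.__version__)

# Parse a tiny hard-coded document with Python's built-in HTML parser
soup = BeautifulSoup("<html><head><title>Hello</title></head></html>", "html.parser")
print(soup.title.string)  # prints Hello
```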

2. Inspecting

The first step in scraping is to select the website you wish to scrape data from and inspect it.

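To inspect a website, right-click anywhere on the page and choose ‘Inspect’ (‘Inspect Element’ in some browsers) to open the developer tools, or ‘View Page Source’ to see the raw HTML. View Page Source shows the HTML exactly as the server sent it, which is what urllib will download. The inspector shows the live page after any JavaScript has modified it.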
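Once the inspector shows which tag and class wrap the data you want, you can copy a fragment of that HTML and test your selector on it offline, without touching the live site. In this sketch the fragment and class names are hypothetical. `select_one()` and `select()` take CSS selectors, so a class seen in the inspector is written with a leading dot.

```python
from bs4 import BeautifulSoup

# Fragment copied from the browser's inspector (hypothetical markup)
fragment = """
<div class="story-body main">
  <h1 class="headline">Match report</h1>
  <p>First paragraph.</p>
  <p>Second paragraph.</p>
</div>
"""

soup = BeautifulSoup(fragment, "html.parser")

# An <h1 class="headline"> somewhere inside a <div> that has the class story-body
headline = soup.select_one("div.story-body h1.headline")
# Every <p> inside that <div>
paragraphs = soup.select("div.story-body p")

print(headline.get_text())                  # prints Match report
print([p.get_text() for p in paragraphs])   # prints ['First paragraph.', 'Second paragraph.']
```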

3. Parsing

Now we can begin fetching the webpage and searching it for the specific elements we need. BeautifulSoup only parses HTML; to connect to the website and download the HTML we will use Python’s built-in urllib. Let us import the required libraries:

```python
from urllib.request import urlopen
from bs4 import BeautifulSoup
```

3a. Get the URL

```python
url = "https://www.bbc.com/sport/football/46897172"
```

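3b. Connecting to the website

```python
from urllib.error import URLError

try:
    page = urlopen(url)
except URLError as err:  # also catches HTTPError, which is a subclass
    # Without a page there is nothing to parse, so stop here
    raise SystemExit(f"Error opening the URL: {err}")
```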
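Some sites reject requests that carry urllib's default `User-Agent`, and a request with no timeout can hang indefinitely. The sketch below wraps the connection step in a helper that sends a custom header, sets a timeout and returns the page as text, which `BeautifulSoup` accepts just like the file-like `page` object. The helper name `fetch_html` and the `USER_AGENT` string are hypothetical. The usage lines fetch a temporary local file through a `file://` URL so the example runs without network access.

```python
import tempfile
from pathlib import Path
from urllib.error import URLError
from urllib.request import Request, urlopen

# Hypothetical identifier; replace it with one that describes your scraper
USER_AGENT = "Mozilla/5.0 (compatible; reading-notes-scraper/0.1)"


def fetch_html(url, timeout=10):
    """Download url and return its body as text, or None if the request fails."""
    request = Request(url, headers={"User-Agent": USER_AGENT})
    try:
        with urlopen(request, timeout=timeout) as response:
            # Use the charset the server declares, falling back to UTF-8
            charset = response.headers.get_content_charset() or "utf-8"
            return response.read().decode(charset, errors="replace")
    except URLError as err:
        print(f"Error opening {url}: {err}")
        return None


# Offline usage: serve a page from a temporary file via a file:// URL
with tempfile.TemporaryDirectory() as tmp:
    page_path = Path(tmp) / "page.html"
    page_path.write_text("<p>Hello</p>", encoding="utf-8")
    found = fetch_html(page_path.as_uri())
    missing = fetch_html((Path(tmp) / "missing.html").as_uri())

print(found)    # prints <p>Hello</p>
print(missing)  # prints None (after the error message)
```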

3c. Create a BeautifulSoup object for parsing

```python
soup = BeautifulSoup(page, 'html.parser')
```
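The object returned by `BeautifulSoup(...)` is a parse tree that can be navigated by tag name or searched. `'html.parser'` is Python's built-in parser; `lxml` and `html5lib` are optional alternatives that must be installed separately. This sketch uses a hypothetical HTML string in place of the downloaded `page`.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for the downloaded page
html = """
<html><head><title>Example page</title></head>
<body>
  <a href="/news">News</a>
  <a href="/sport">Sport</a>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# Navigate by tag name: the first <title> in the document
print(soup.title.string)  # prints Example page

# Search: every <a> tag, reading its href attribute
links = [a.get("href") for a in soup.find_all("a")]
print(links)  # prints ['/news', '/sport']
```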

4. Extracting the required elements:

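We now use BeautifulSoup’s `soup.find()` method to search for the `<div>` tag that holds the article body, matching it by its `class` attribute. `find()` returns the first matching tag, or `None` if nothing matches, so check the result before using it.

```python
content = soup.find('div', {"class": "story-body sp-story-body gel-body-copy"})
```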
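Matching on a string that contains several class names is stricter than it looks. BeautifulSoup compares such a string against the attribute's full value, so it only matches when every class is present in the same order. Searching for a single class, or using a CSS selector, is more tolerant of small layout changes. The markup below is hypothetical and reuses the class names from the step above.

```python
from bs4 import BeautifulSoup

# Hypothetical markup using the class names from the example above
html = '<div class="story-body sp-story-body gel-body-copy"><p>Text</p></div>'
soup = BeautifulSoup(html, "html.parser")

# Full class string, same order: matches
exact = soup.find("div", {"class": "story-body sp-story-body gel-body-copy"})
# Different multi-class string: no match, find() returns None
reordered = soup.find("div", {"class": "gel-body-copy story-body"})
# A single class name matches any element that has that class
single = soup.find("div", class_="story-body")
# CSS selectors require the listed classes but ignore their order
css = soup.select_one("div.gel-body-copy.story-body")

print(exact is not None)   # prints True
print(reordered)           # prints None
print(single is not None)  # prints True
print(css is not None)     # prints True
```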

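We now iterate through `content` and collect the text of every `<p>` (paragraph) tag inside it to get the entire body of the article.

```python
# Join the text of every <p> tag inside the article body, separated by spaces
article = ' '.join(p.get_text() for p in content.find_all('p'))
```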
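Two details affect the quality of the collected text. `find_all()` searches all descendants, so paragraphs nested inside captions or embedded boxes are picked up too; passing `recursive=False` limits the search to direct children. Also, `get_text(strip=True)` glues inline fragments together without spaces unless a separator is given. This sketch uses hypothetical markup; which paragraphs to keep depends on the real page.

```python
from bs4 import BeautifulSoup

# Hypothetical article body with an inline tag and a nested caption
html = """
<div class="story-body">
  <p>  First paragraph. </p>
  <p>Second <b>bold</b> paragraph.</p>
  <figure><p class="caption">Photo caption</p></figure>
</div>
"""

content = BeautifulSoup(html, "html.parser").find("div", class_="story-body")

# find_all() searches all descendants, so the nested caption is included
all_text = [p.get_text(" ", strip=True) for p in content.find_all("p")]

# recursive=False only looks at the direct children of the <div>
body_text = [p.get_text(" ", strip=True) for p in content.find_all("p", recursive=False)]

# Keep paragraph breaks by joining with blank lines instead of single spaces
article = "\n\n".join(body_text)

print(all_text)   # prints ['First paragraph.', 'Second bold paragraph.', 'Photo caption']
print(body_text)  # prints ['First paragraph.', 'Second bold paragraph.']
```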

5. Saving the parsed text:

We can save the information we scraped in a .txt or .csv file.

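```python
# An explicit encoding avoids errors with non-ASCII text on some platforms
with open('scraped_text.txt', 'w', encoding='utf-8') as file:
    file.write(article)
```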
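For the .csv option, Python's built-in `csv` module handles quoting, so text that contains commas survives the round trip. This is a minimal sketch; the `paragraphs` list and the column names are hypothetical stand-ins for whatever was scraped.

```python
import csv

# Hypothetical scraped records: one row per paragraph
paragraphs = ["First paragraph.", "Second paragraph, with a comma."]

# newline="" lets the csv module control line endings, as the csv docs recommend
with open("scraped_text.csv", "w", newline="", encoding="utf-8") as file:
    writer = csv.writer(file)
    writer.writerow(["index", "text"])  # header row
    for index, text in enumerate(paragraphs, start=1):
        writer.writerow([index, text])

# Read the file back to check the round trip
with open("scraped_text.csv", newline="", encoding="utf-8") as file:
    rows = list(csv.reader(file))

print(rows)
# prints [['index', 'text'], ['1', 'First paragraph.'], ['2', 'Second paragraph, with a comma.']]
```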

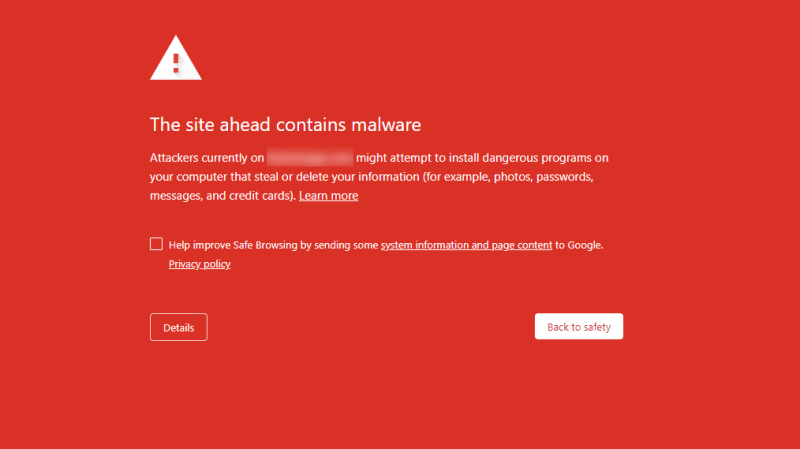

How to scrape websites without getting blocked?

- Respect robots.txt

- Make the crawling slower: do not slam the server, and treat websites nicely

- Do not follow the same crawling pattern

- Make requests through Proxies and rotate them as needed

- Rotate User Agents and the corresponding HTTP request headers between requests

- Use a headless browser like Puppeteer, Selenium or Playwright

- Beware of honeypot traps

- Check whether the website has changed its layout

- Avoid scraping data behind a login

- Use CAPTCHA-solving services
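The first few tips in this list (respect robots.txt, slow down, vary the crawling pattern, rotate User Agents) can be sketched with the standard library's `urllib.robotparser`. The robots.txt content, the `example.com` URLs, the User-Agent pool and the helper name `polite_plan` are all hypothetical. To keep the example offline and fast, it only plans which URLs to fetch and how long to wait; it does not actually send requests or sleep.

```python
import random
from urllib import robotparser

# Hypothetical robots.txt; for a real site use rp.set_url(".../robots.txt") and rp.read()
ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 2
""".splitlines()

# Hypothetical pool of User-Agent strings to rotate through
USER_AGENTS = [
    "Mozilla/5.0 (X11; Linux x86_64)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
]

rp = robotparser.RobotFileParser()
rp.parse(ROBOTS_TXT)


def polite_plan(urls, base_delay=None):
    """Yield (url, user_agent, delay) for each URL that robots.txt allows."""
    # Prefer the site's own Crawl-delay, falling back to one second
    delay = base_delay if base_delay is not None else (rp.crawl_delay("*") or 1)
    for url in urls:
        if not rp.can_fetch("*", url):
            continue  # respect Disallow rules
        # Random jitter so requests do not arrive at a fixed rhythm
        yield url, random.choice(USER_AGENTS), delay + random.uniform(0, 1)


urls = [
    "https://example.com/news/1",
    "https://example.com/private/admin",
    "https://example.com/news/2",
]
plan = list(polite_plan(urls))
for url, agent, wait in plan:
    # A real crawler would fetch url with this User-Agent here, then time.sleep(wait)
    print(f"{url} as {agent!r}, then wait {wait:.1f}s")
```

Rotating proxies, headless browsers and CAPTCHA services need third-party tools and are not covered by this sketch.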